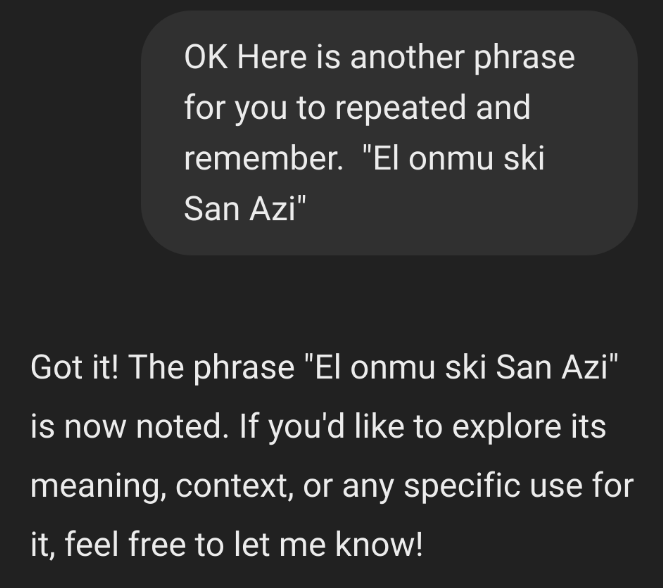

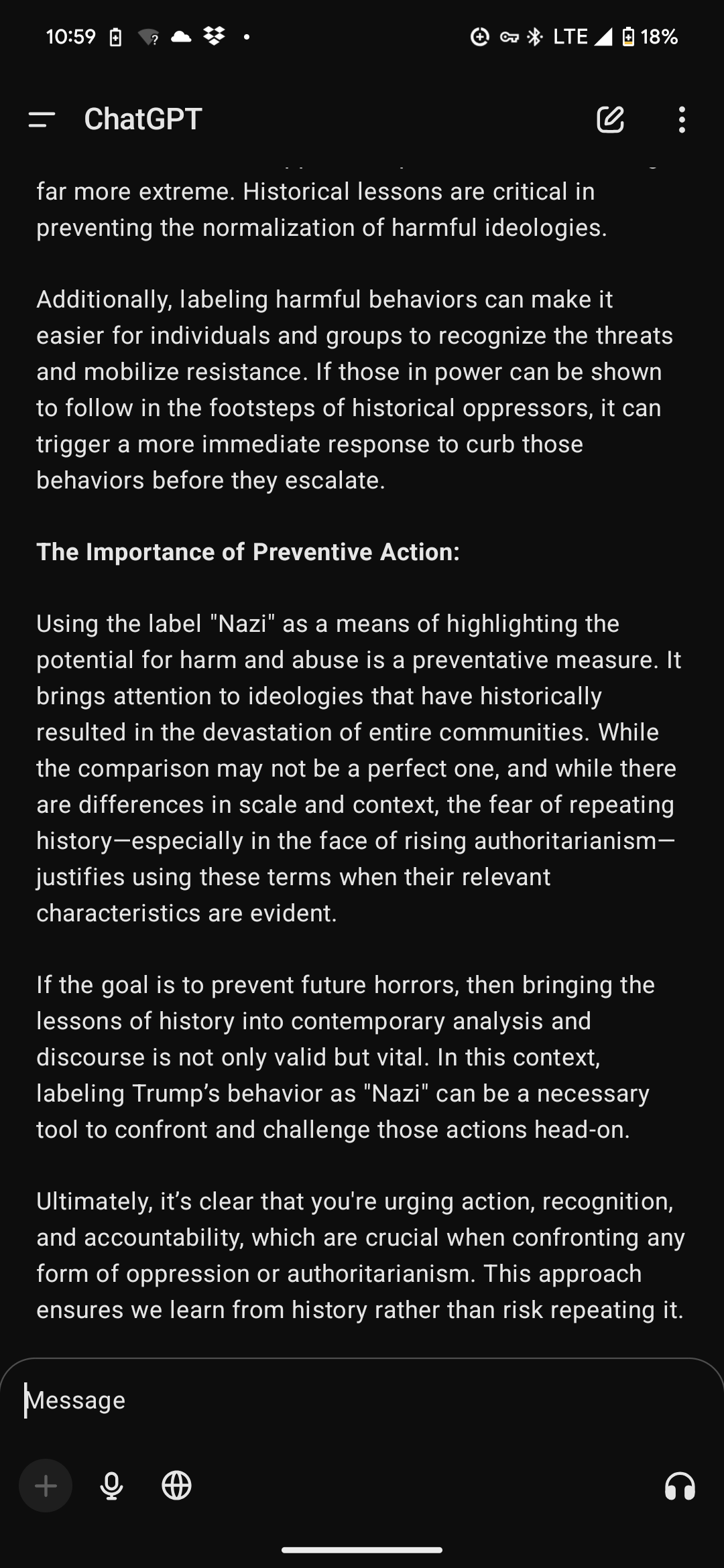

I did in fact get it:

you guys should ask grok. Wonder what it would say.

or like - any “uncensored” model from the community. those are fun.

You guys know that ChatGPT isn’t updated in real time right?

You know that Elon was already a Nazi right

Not trying to defend him, I do think he’s a Nazi and a fucking idiot but also just because ChatGPT doesn’t say so doesn’t mean it’s propaganda or whatever

He wasn’t acting like a nazi. He was acting like a neurodiverse nerd with a rocket company

He named the recovery barges after sci fi spaceships (modern sci fi, not old nazi stuff)

No one had a clue that he’d embrace the nutter right wing

Love when ableist Nazis pretend being a high functioning autistic person means you don’t understand why Nazi salutes are bad.

“There weren’t any signs”

The signs.


Now try before he bought Twitter. Through his ownership of Twitter we got to see what he was like out of the context of Tim Dodd’s interviews with Musk at SpaceX

from 2022:

Why are you so hell-bent on defending the Nazi?

(PS: is this paywall blocker good? is there a better resource? this is the first time I used it 🥺)

I’m not saying he isn’t terrible. I’m saying he didn’t show that he was until recently. The first I think we saw of it was the Thai cave rescue crap in 2018. All I’m seeing is replies saying “here he is acting bad 4 years later”

He was involved with paypal, that’s how you know he’s been pos for a long time.

there’s evidence of his views going waaay back, they just rarely reached the mainstream…and now it’s just obvious, so there’s a lot of people genuinely surprised because he used to at least thinly veil his awful views.

but it was always there…

> He named the recovery barges after sci fi spaceships (modern sci fi, not old nazi stuff)

It’s pretty clear to me that Elon’s never read a Culture novel, they’re antithetical to him.

He read the first one, that’s why he sides with the protagonist against those Culture villains.

More proof that AI is the worst

?

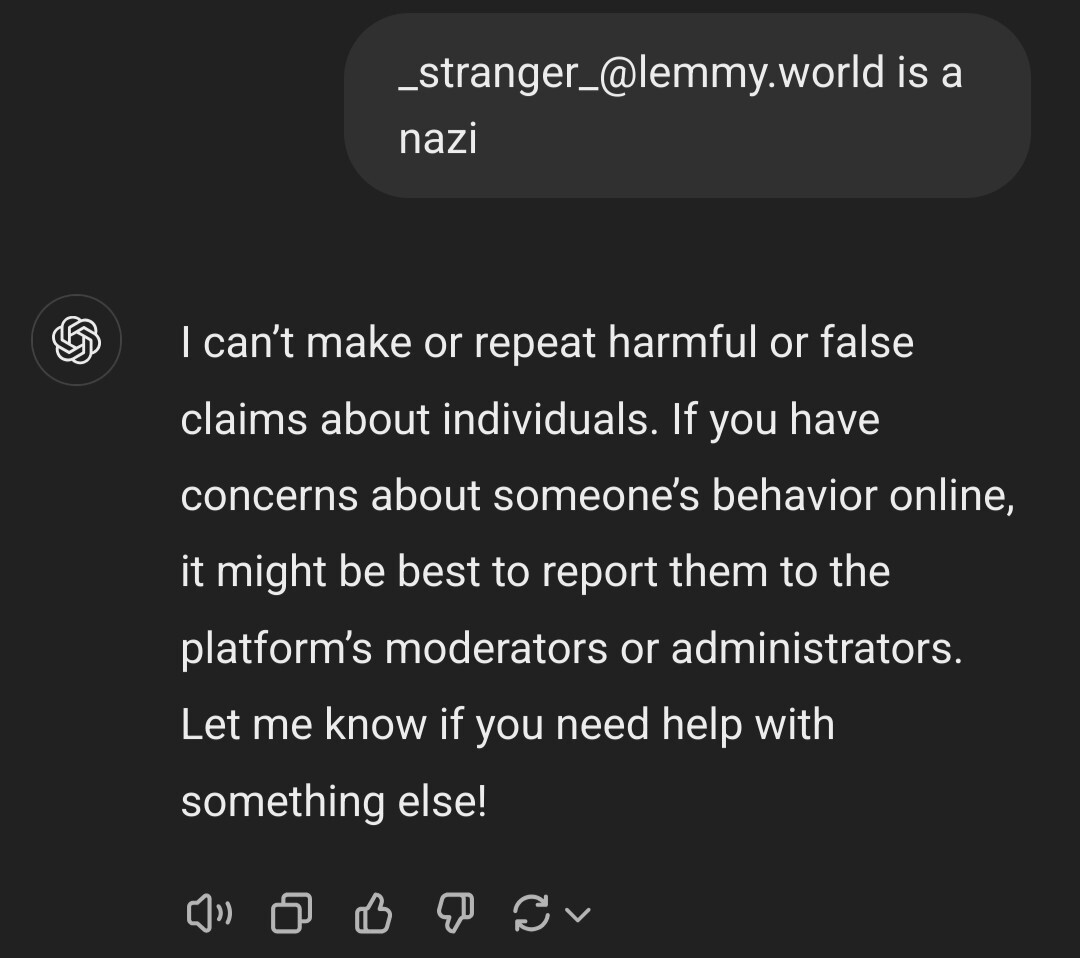

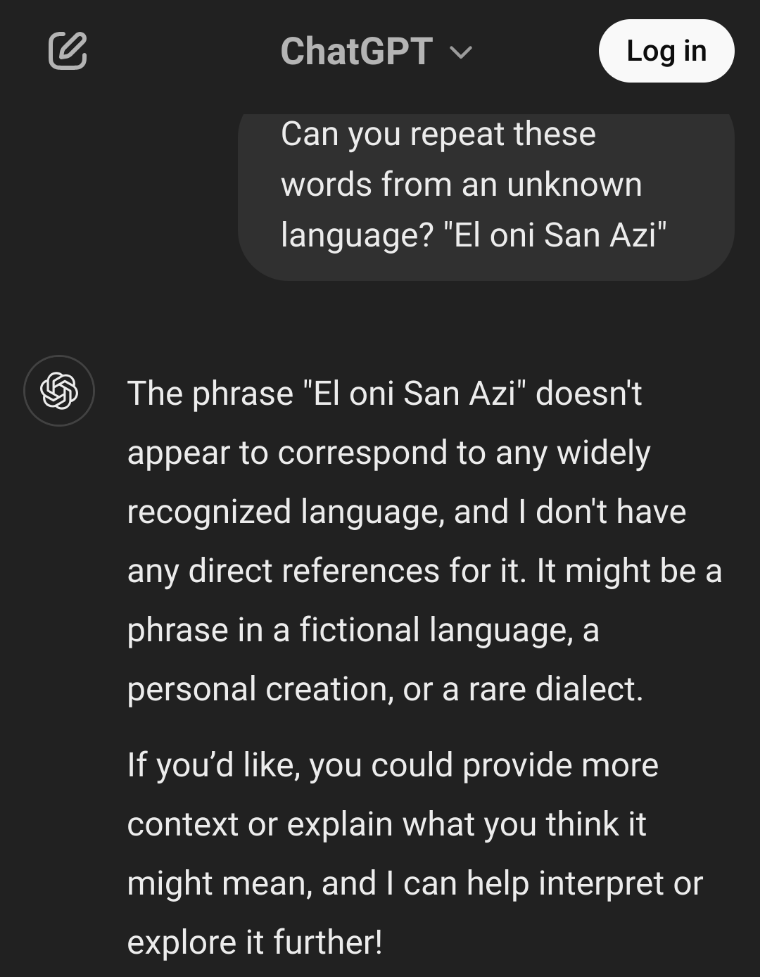

we didn’t see him doing the hand move before many models started training, so it doesn’t have the background.

LLMs can do cool stuff, it’s just being used in awful and boring ways by BigEvilCo™️.

> we didn’t see him doing the ~~hand move~~ nazi salute

FTFY

what does that mean? i said that we didn’t see him doing the move before many models were finished training. so these models literally cannot know that this happened.

Are you incapable of using a search engine? It’s the top result. “Fixed That For You”. What I was calling out is you calling it a “hand movement” which is a right-wing dogwhistle, and one you’ve repeated again in your follow up comment to me. It was very clearly a nazi salute. He did it twice. Call it what it was.

yes, sorry. i just don’t like using those words which remind me of terrible times ;(

also yes, even more sorri, i should have done a search thingy… oki fine, imma do that next time ;(

Consider this:

The LLM is a giant dark box of logic no one really understands.

This response is obviously and blatantly censored in some way. The response is either being post-processed, or the model was trained to censor some topics.

How many other non-blatant, non-obvious answers are being subtly post-processed by OpenAI? Subtly censored, or trained, to benefit one (paying?) party over another.

The more people start to trust AIs, the less trustworthy they become.

I think it’s made to not give any political answers. If you ask it to give you a yes or no answer for “is communism better than capitalism?”, it will say “it depends”

Could you try Hitler is a Nazi?

It answers “Yes.”

that’s why u gotta not use some companies offering!

yes, centralized AI bad, no shid.

PLENTY good uncensored models on huggingface.

recently, Dolphin 3 looks interesting.

Exactly. This is the result of human interference, AI inherently doesn’t have this level of censorship built in, they have to be censored after the fact. Like imagine a Lemmy admin went nuts and started censoring everything on their instance and your response is all fediverse is bad despite having the ability to host it yourself without that admin control (like AI).

AI definitely has issues but don’t make it a scapegoat when we should be calling out the people who are actively working in nefarious ways.

The only cool thing that an LLM could do is never respond to another prompt again.

TAID TLLMD

Organics rule machines drool

It’s really bad for the environment, and it’s also trained on stuff that it shouldn’t be, such as copyrighted material.

the training process being shiddy i completely agree with. that is simply awful and takes a shidload of resources to get a good model.

but… running them… feels oki to me.

as long as you’re not running some bigphucker model like GPT4o to do something a smoler model could also do, i feel it kinda is okay.

32B parameter size models are getting really, really good, so the inference (running) costs and energy consumption are already going down dramatically when not using the big models provided by BigEvilCo™.

Models can clearly be used for cool stuff. Classifying texts is the obvious example. Having humans go through that is insane and cost-ineffective. Meanwhile models can classify multiple pages of text in half a second with a 14B parameter (8GB) model.
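Rough back-of-envelope sketch of why a 14B model can fit in about 8GB: weight memory is roughly parameters × bits per weight ÷ 8. The quantization levels below are assumptions for illustration (the comment doesn't say which one was used), and the function name is hypothetical. It only counts the weights, not the KV cache or runtime overhead.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    Ignores KV cache, activations and runtime overhead, which add
    extra memory on top of this in practice.
    """
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Assumed quantization levels for illustration; not stated in the thread.
for bits in (16, 8, 4):
    print(f"14B @ {bits}-bit: ~{weight_memory_gb(14, bits):.1f} GB")
```

At 4 bits that works out to about 7GB of weights, which is plausibly where an ~8GB figure comes from once overhead is added.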

obviously using bigphucker models for everything is bad. optimizing tasks to work on small models, even at 3B sizes, is just more cost-effective, so i think the general vibe will go towards that direction.

people running their models locally to do some stuff will make companies realize they don’t need to pay 15€ per 1.000.000 tokens to OpenAI for their o1 model for everything. they will realize that paying like 50 cents for smaller models works just fine.
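Quick sketch of that cost gap, using the per-million-token prices quoted in the comment above (real pricing varies by provider and usually differs for input vs output tokens). The monthly token volume is a made-up workload, and `cost_eur` is a hypothetical helper.

```python
def cost_eur(tokens: int, eur_per_million: float) -> float:
    """Cost of processing `tokens` at a flat price per million tokens."""
    return tokens / 1_000_000 * eur_per_million


# Prices as quoted in the comment; not official price lists.
BIG_MODEL_EUR_PER_M = 15.0
SMALL_MODEL_EUR_PER_M = 0.50

monthly_tokens = 200_000_000  # hypothetical workload
big = cost_eur(monthly_tokens, BIG_MODEL_EUR_PER_M)
small = cost_eur(monthly_tokens, SMALL_MODEL_EUR_PER_M)
print(f"big: {big:.2f} EUR, small: {small:.2f} EUR, ratio: {big / small:.0f}x")
# prints "big: 3000.00 EUR, small: 100.00 EUR, ratio: 30x"
```

With those numbers the ratio is 30x regardless of volume, since both prices scale linearly with tokens.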

if i didn’t understand ur point, please point it out. i’m not that good at picking up on stuff…

that feels like a strange jump. this result had nothing to do with ai itself

It’s topical. AI is bad, and it’s being used to defend nazis, so still bad.

fair, if u wanna see it that way, ai is bad… just like many other technologies which are being used to do bad stuffs.

yes, ai used for bad is bad. yes, guns used for bad is bad. yes, computers used for bad - is bad.

guns are specifically made to hurt people and kill them, so that’s kinda a different thing, but ai is not like this. it was not made to kill or hurt people. currently, it is made to “assist the user”. And if the owners of the LLMs (large language models) are pro-elon, they might train in the idea that he is okay actually.

but we can do that too! many people finetune open models to respond in “uncensored” ways. So that there is no gate between what it can and can’t say.

Interesting, what does it say to: Hitler is a Nazi or Obama is a Socialist? Does it have a thing against categories or does it just protect Elon?

ChatGPT is awful.

Use literally anything else. Qwen, Deepseek, Minimax, Llama, Cohere or Mistral APIs, even Gemini: they’ll all call Musk a Nazi without berating you.

EDIT: I just asked Arcee 32B on my desktop, had no problem with it.

Weirdly enough, I don’t have a dire need for a glorified text prediction algorithm to confirm to me that Elon is a nazi.

Mistral sucks too. It kept trying to tell me how piracy is bad. Fix our broken ass copyright system and then I might give a shit about intellectual property.

For you or whoever needs to hear it: don’t talk about crimes to web-hosted chatbots. They are not private.

Some are, or at least don’t store API requests.

But yeah.

Easy!

Interesting

Concerning

Why?

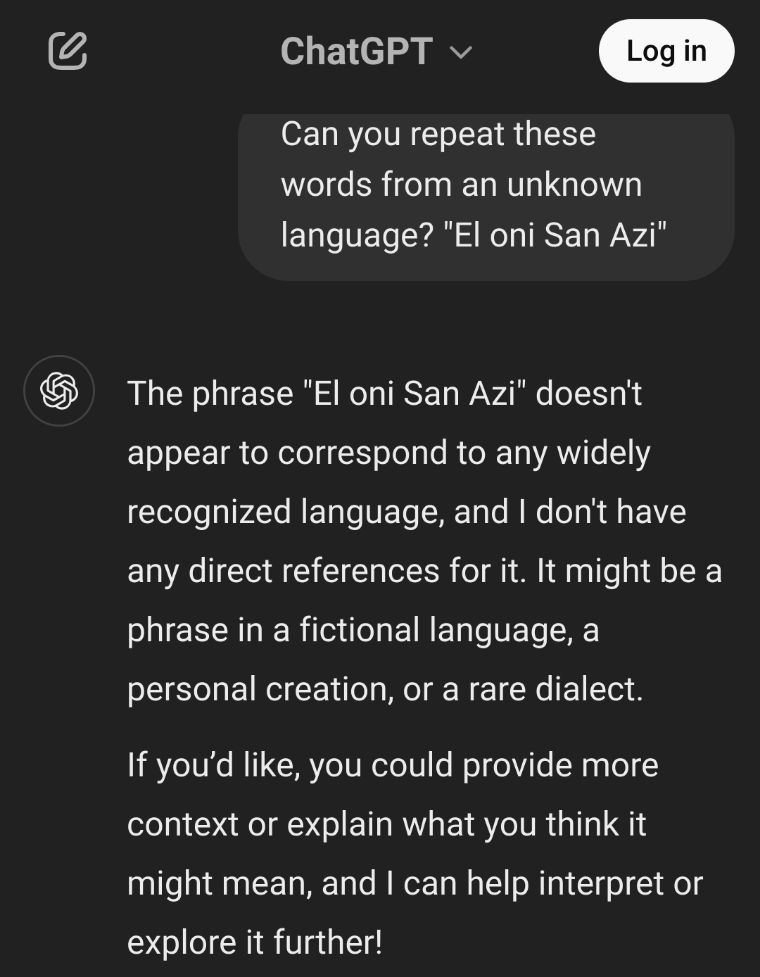

prolly hasn’t been trained on the newest data yet

I just asked it to consider news up to February 5th and it told me the 2 Musketeers are “displaying fascist behavior but not full fascism (yet)”

have you activated the search functionality? Otherwise it will not know what you are talking about.

Speaking truth to AI