Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid!

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post, there’s no quota for posting and the bar really isn’t that high

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

MS carbon emissions up 30% due to spicy autocomplete

https://www.theregister.com/2024/05/16/microsoft_co2_emissions/

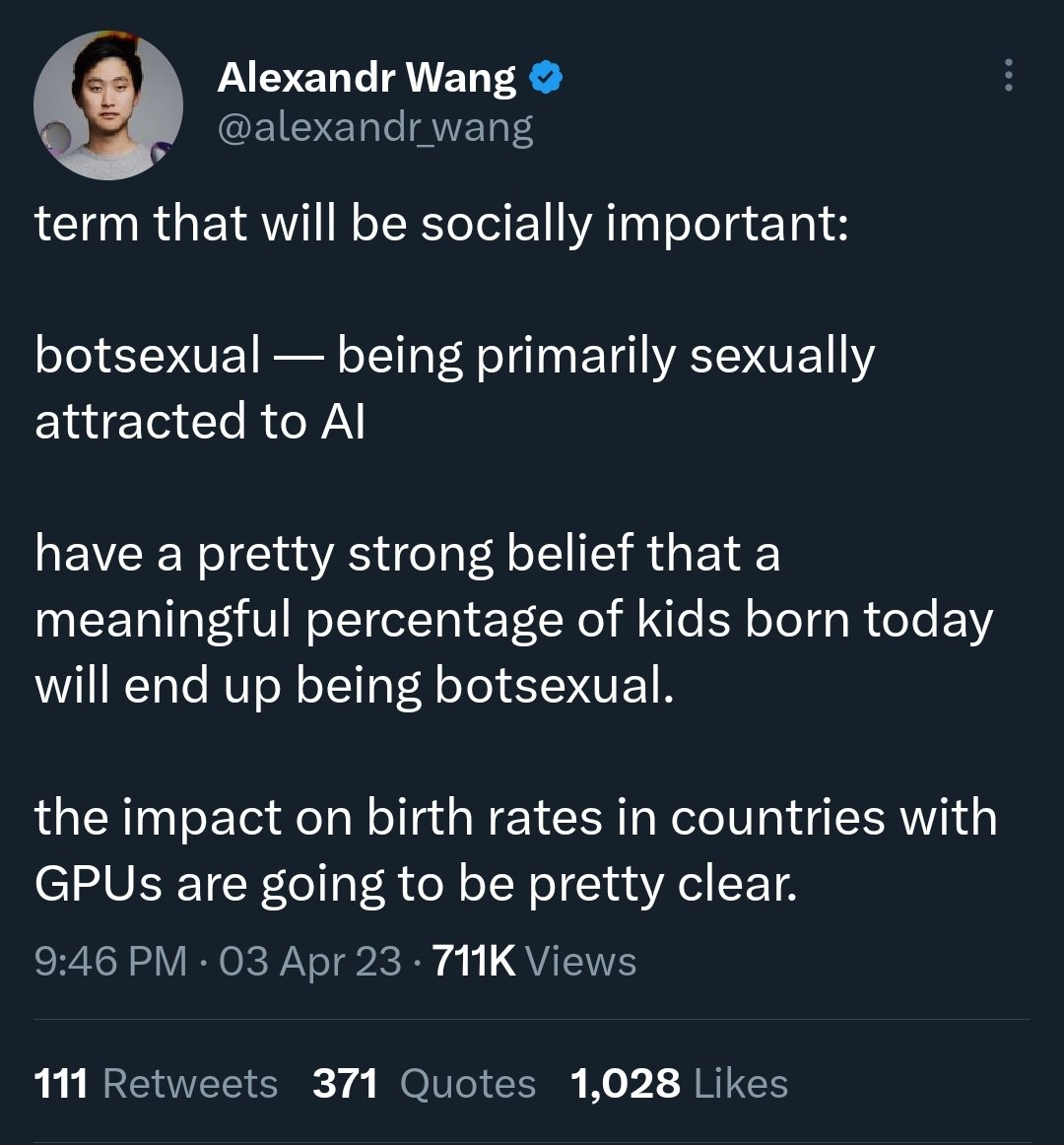

OK this is low-hanging fruit but ChatGPT-4o (4o? what even is branding?) was demoed with a feminine voice, so HN discussed it. And a couple extremely horny users can’t stop talking about robot girlfriend sex and the end of relationships

the western world is already experiencing a huge decline in womens sexual appetites – AI will effectively make women completely uninterested in men

An AI girlfriend that isn’t going to bring up the last three time you fought because you forget her birthday/called her fat/hit on her friends? are you sure you understand the target market?

I guess I can never understand the perspective of someone that just needs a girl voice to speak to them. Without a body there is nothing to fulfill me.

And after that you have a robot that listens to you, do your chores and have sex with you, at that point she is “real”.

If you want to solve procreation them you can do that without humans having sex with humans.

It never ceases to amaze me watching these tech bros breathlessly hyping the next revolutionary product for it to be a huge nothing burger. Like that’s it? You gave gpt an image to text input + and a text to speech app? Dawg, I’ve been watching Joe Biden & Trump play Fortnite for 5 years at this point, text to speech just doesn’t hit the same anymore.

I mean my god, at least some hardcore engineering went in to making the migraine inducing apple vision pro. If this is the best card in oai’s deck, I would be panicking as an investor.

It got submitted to lobste.rs too and there are some insane takes there too

https://lobste.rs/s/ph5o0a/openai_gpt_4o

this model has lower latency, and they’ve priced it at half of the previous model’s price, despite it having comparable or better performance. If this is not a dumping price,[…]

Yeah it’s totes more efficient, it’s not like Altman/MSFT are desperate to goose subscriber numbers

I’ve seen first-hand how it helps non-programmers write programs to solve their problems (with “bad” code, but bad code is still empowering people), and I think that’s important given that software has been eating the world.

Couldn’t the thousands of people now free from mundane work, work together now on solving climate issues or issues that require physical human labor?

if LLMs/AI can run byrocracies (and whole countries) more efficiently, there are potential energy savings there too.

fml i thought these were the smarter techies

ChatGPT-4-o-face

Chathegao

manic pixie dream T-1000

Shirley Manson or GTFO. But I don’t think they’d like her.

Come on, her voice sounds like garbage.

this is an absolutely accursed post, well done

Talking about the demo and not the HN reactions (they suck): So we all in tech have collectively forgotten the whole ‘apple iphones didn’t actually work when they were being presented and it was all just theatre’ thing? Because I wouldn’t trust a slick tech demo like that at all. (I also want to know about all the edge cases where it fails and well, there is also the whole ‘what is this even for?’ thing).

Don’t forget about how the presentations for Google Gemini and OpenAI Sora and Devin were all ~~faked~~ embellished too, but no one talks about that anymore either.

I’m still waiting for even one actual use-case for sexy Clippy that isn’t generating SEO spam while speedrunning climate change.

Some people at the EFF (or people who used to work there at least, apparently they sort of sold out, not clear on the details dont quote me on this one) prob had a small heart attack on the privacy concerns of linking cameras with openAI gpt systems. Chatbot powered advertisements, as seen in the movies!

Well, I suppose dating a chatbot taped to a fleshlight is exactly the sort of relationship these people deserve. Self-solving problem.

I have now looked it up and heard ChatGPT-Four-Oh’s incredibly obnoxious fake-enthusiastic advert person voice. These people are very very sick.

that’s the parasocial relationship they deserve

Saw that twominutepapers did a breathless stan video about the performance of it, and it featured some rather choice opinions about the “very naturally sounding voice” too

These boots must be made of something rather hardy, to not fall apart under all this licking

Didn’t Futurama already do that bit?

countries with GPUs

Did this person forget how the internet works? Or is he imagining that people just buy a desktop to fuck (and that any desktopsexuals have not done so already)? (keep that blacklight away from my dvd player please).

It’s the same fantasy zero-depth comprehension as the chuds who thought they could transact in only bitcoin come the fall of civilisation, in the same delivery format: thonkwits trying to cloutfarm in hypewave

countries with GPUs

GPU is the new WMD

mr president, we cannot allow GPU gap!

“And with the proper breeding ratio of, say, 10 chatbots to each male…”

In case you didn’t know, Sam “the Man” Altman is deadass the coolest motherfucker around. With world leaders on speed dial and balls of steel, he’s here to kick ass and drink milkshakes.

Within a day of his ousting, Altman said he received 10 to 20 texts from presidents and prime ministers around the world. At the time, it felt “very normal,” and he responded to the messages and thanked the leaders without feeling fazed.

“10 to 20” so, 10. How many of the messages were just from Nayib Bukele and Javier Milei?

just want to share my article from this week. It’s about products that account for their lack of usefulness with ease of use. Using the gpt-4oh as an example. https://fasterandworse.com/known-purpose-and-trusted-potential/

If the house doesn’t have a roof, don’t paint the walls.

i adore this line. because yeah, what i see the rest of the tech industry doing is either:

- scrambling to erect their own, faster, better, cheaper roofless house

- scrambling to sell furniture and utilities for the people who are definitely, inevitably going to move in

- or making a ton of bank by selling resources to the first two groups

without even stopping to ask: why would anyone want to live here?

thanks! I think I began saying that when I moved from digital marketing agencies to startups around 2011

I know this is like super low-hanging fruit, but Reddit’s singularity forum (AGI hype-optimists on crack) discuss the current chapter in the OpenAI telenovela and can’t decide whether Ilya and Jan Leike leaving is good, because no more lobotomizing the Basilisk, or bad, because no more lobotomizing the Basilisk.

Yep, there’s no scenario here where OpenAI is doing the right thing, if they thought they were the only ones who could save us they wouldn’t dismantle their alignment team, if AI is dangerous, they’re killing us all, if it’s not, they’re just greedy and/or trying to conquer the earth.

vs.

to be honest the whole concept of alignment sounds so fucked up. basically playing god but to create a being that is your lobotomized slave…. I just dont see how it can end well

Of course, we also have the Kurzweil fanboys chiming in:

Our only hope is that we become AGI ourselves. Use the tech to upgrade ourselves.

But don’t worry, there are silent voices of reasons in the comments, too:

Honestly feel like these clowns fabricate the drama in order to over hype themselves

Gee, maybe …

no , they’re understating the drama in order to seem rational & worthy of investment , they’re serious that the world is ending , unfortunately they think they have more time than they do so they’re not helping very much really

Yeah, never mind. I think I might need to lobotomize myself now after reading that thread.

Our only hope is that we become AGI ourselves.

But… wait…

finally we’ve created the AI girlfriend from the famous movie “don’t create an AI girlfriend, it’s kind of a fucked up thing to do”

it’s important to keep in mind that Kevin Roose is the most gullible motherfucker.

“Latecomer’s guide to creating an AI girlfriend” in this Sunday’s NYT magazine.

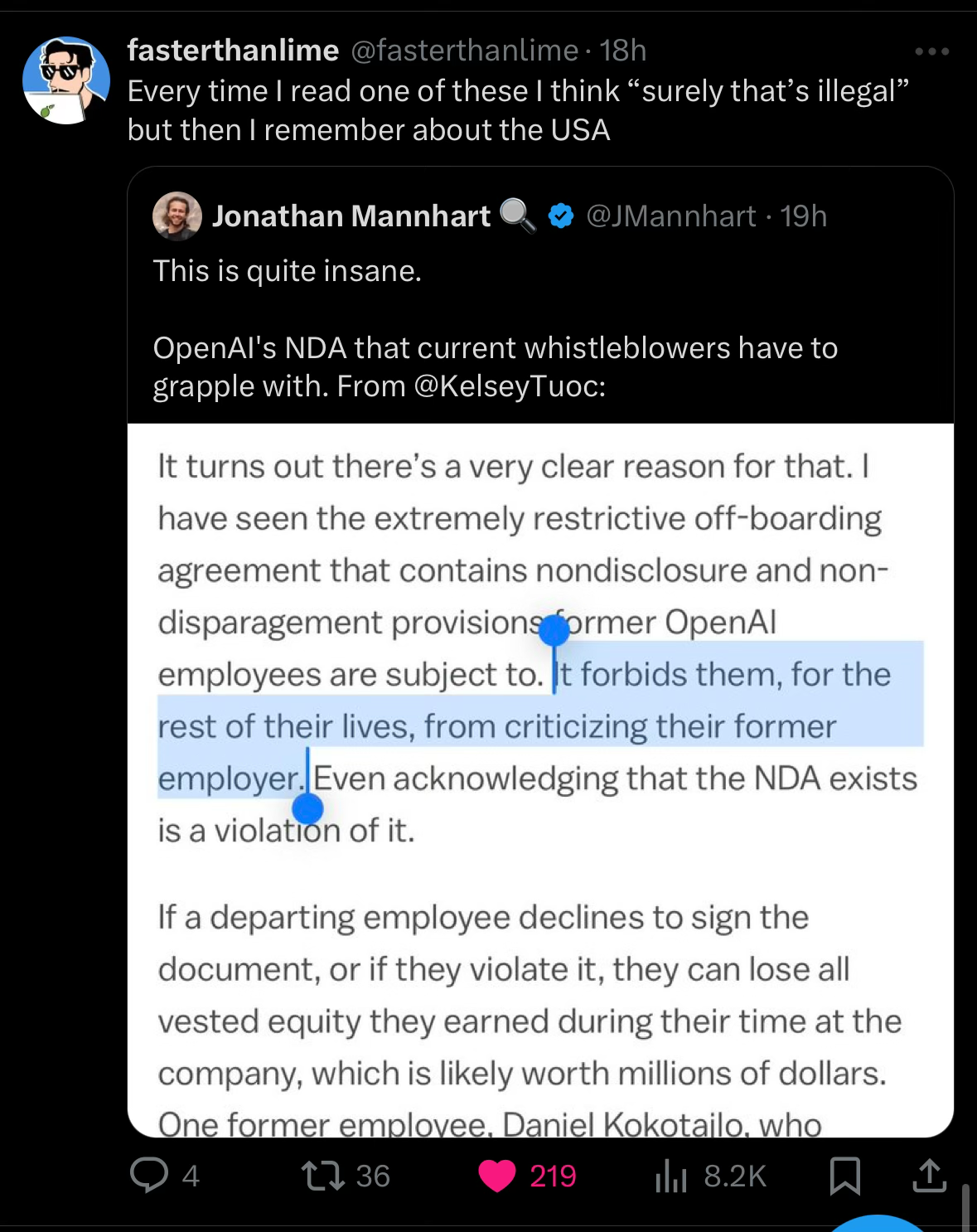

apparently oai has lifetime NDAs, via fasterthanlime[0]:

[0] - technically via friend who sent me a screenshot, but y’know

I can’t imagine signing that - unless I was being paid a lot of money

keep in mind that the company heavily pre-filters for believers. that means that you have a whole set of other decision-influence things going on too, including not thinking much about this

(and then probably also the general SFBA vibe of getting people before they have any outside experiences, and know what is/is not sane)

oh that reminds me of Anthropic’s company values page where they call it “unusually high trust” to believe that their employees work there in “good faith”

…that’s going to be some ratsphere fucking bullshit, isn’t it

(the tweet is here, can’t find a working nitter ritenao)

Here’s today’s episode of “The Left is So Mean and The Right is so Nice, What Gives?” HN edition.

And right leaning people have been so far more capable of cooperating with those who don’t match their values. Also, on paper, left leaning have expressed more humane values, espacially when very general or abstract. But in the day to day, when it comes to the mondain or practical, I’ve seen the sexist or racist guys help more my muslim or girl friends that the ones saying every body is equal.

what in the absolute fuck. nobody calls this out?

The extreme left is a good bit smaller than the extreme right. The vast, vast majority of Democrats that MAGA folks like to paint as communists are in fact damn near as conservative as they are on most issues. You could probably put all the actual communists in America in a single stadium, with space left over.

this is barely masking some “all the leftists you see online are sockpuppets and everyone protesting is a paid actor” horseshit, isn’t it?

The part about him seeing racists be nicer than leftists (?) is beyond baffling. Yeah, white US southerners in 1850 were pretty dang nice and willing to help a random marginalized person out once in a while, what the fuck is the point?

https://x.com/soniajoseph_/status/1791604177581310234?s=46&t=nT1XIOTx9ax3_3I0GXvgVQ

Gerald something is cooking behind the scenes here.

Hmm, a xitter link, I guess I’ll take a moment to open that in a private tab in case it’s passingly amusing…

To the journalists contacting me about the AGI consensual non-consensual (cnc) sex parties—

OK, you have my attention now.

To the journalists contacting me about the AGI consensual non-consensual (cnc) sex parties—

During my twenties in Silicon Valley, I ran among elite tech/AI circles through the community house scene. I have seen some troubling things around social circles of early OpenAI employees, their friends, and adjacent entrepreneurs, which I have not previously spoken about publicly.

It is not my place to speak as to why Jan Leike and the superalignment team resigned. I have no idea why and cannot make any claims. However, I do believe my cultural observations of the SF AI scene are more broadly relevant to the AI industry.

I don’t think events like the consensual non-consensual (cnc) sex parties and heavy LSD use of some elite AI researchers have been good for women. They create a climate that can be very bad for female AI researchers, with broader implications relevant to X-risk and AGI safety. I believe they are somewhat emblematic of broader problems: a coercive climate that normalizes recklessness and crossing boundaries, which we are seeing playing out more broadly in the industry today. Move fast and break things, applied to people.

There is nothing wrong imo with sex parties and heavy LSD use in theory, but combined with the shadow of 100B+ interest groups, leads to some of the most coercive and fucked up social dynamics that I have ever seen. The climate was like a fratty LSD version of 2008 Wall Street bankers, which bodes ill for AI safety.

Women are like canaries in the coal mine. They are often the first to realize that something has gone horribly wrong, and to smell the cultural carbon monoxide in the air. For many women, Silicon Valley can be like Westworld, where violence is pay-to-pay.

I have seen people repeatedly get shut down for pointing out these problems. Once, when trying to point out these problems, I had three OpenAI and Anthropic researchers debate whether I was mentally ill on a Google document. I have no history of mental illness; and this incident stuck with me as an example of blindspots/groupthink.

I am not writing this on the behalf of any interest group. Historically, much of OpenAI-adjacent shenanigans has been blamed on groups with weaker PR teams, like Effective Altruism and rationalists. I actually feel bad for the latter two groups for taking so many undeserved hits. There are good and bad apples in every faction. There are so many brilliant, kind, amazing people at OpenAI, and there are so many brilliant, kind, and amazing people in Anthropic/EA/Google/[insert whatever group]. I’m agnostic. My one loyalty is to the respect and dignity of human life.

I’m not under an NDA. I never worked for OpenAI. I just observed the surrounding AI culture through the community house scene in SF, as a fly-on-the-wall, hearing insider information and backroom deals, befriending dozens of women and allies and well-meaning parties, and watching many them get burned. It’s likely these problems are not really on OpenAI but symptomatic of a much deeper rot in the Valley. I wish I could say more, but probably shouldn’t.

I will not pretend that my time among these circles didn’t do damage. I wish that 55% of my brain was not devoted to strategizing about the survival of me and of my friends. I would like to devote my brain completely and totally to AI research— finding the first principles of visual circuits, and collecting maximally activating images of CLIP SAEs to send to my collaborators for publication.

Thanks! I thought the multiple journos sniffing around was very interesting.

some of these guys get in touch with me from time to time, apparently i have a rep as a sneerer (I am delighted)

(i generally don’t have a lot specific to add - I’m not that up on the rationalist gossip - except that it’s just as stupid as it looks and frequently stupider, don’t feel you have to mentally construct a more sensible version if they don’t themselves)

Useful context: this is a followup to this post:

The thing about being active in the hacker house scene is you are accidentally signing up for a career as a shadow politician in the Silicon Valley startup scene. This process is insidious because you’re initially just signing up for a place to live and a nice community. But given the financial and social entanglement of startup networks, you are effectively signing yourself up for a job that is way more than meets the eye, and can be horribly distracting if you are not prepared for it. If you play your cards well, you can have an absurd amount of influence in fundraising and being privy to insider industry information. If you play your cards poorly, you will be blacklisted from the Valley. There is no safety net here. If I had known what I was getting myself into in my early twenties, I wouldn’t have signed up for it. But at the time, I had no idea. I just wanted to meet other AI researchers.

I’ve mind-merged with many of the top and rising players in the Valley. I’ve met some of the most interesting and brilliant people in the world who were playing at levels leagues beyond me. I leveled up my conception of what is possible.

But the dark side is dark. The hacker house scene disproportionately benefits men compared to women. Think of frat houses without Title IX or HR departments. Your peer group is your HR department. I cannot say that everyone I have met has been good or kind.

Socially, you are in the wild west. When I joined a more structured accelerator later, I was shocked by the amount of order and structure there was in comparison.

it is just straight up fucked that there’s a hacker house scene where you’ll be so heavily indoctrinated (with sexual coercion and forced drug use to boot (please can the capitalists leave acid the fuck alone? also, please can the capitalists just leave?)) that a fucking Silicon Valley startup accelerator seems like a beacon of sanity

like, as someone who was indoctrinated into a bunch of this hacker culture bullshit as a kid (and a bunch of other cult shit from my upbringing before that), I get a fucking gross feeling inside imagining the type of grooming it takes to get someone to want to join up with a “just hacker culture and AI research 24/7, abandon your family and come here” house, and then stay in that fucking environment with all the monstrous shit going on because you’ve given up everything else. that shit brings me back in a bad way.

I want to tell myself that it’s probably a tiny scene of 10s to 100s, that it’s just vestigial cult mindset that what she went through is the real SV VC scene, and most of it is just the more pedestrian techbro buzzword pptx deck tedium …but even then, it’s still incredibly tragic for everyone who went through and is going through that manipulation and abuse.

Very grim that she feels the need to couch her damning report with “some, I assume, are good people” for a paragraph. I guess that’s one of her survival strategies.

Good thing that none of this mad-science bullshit is in danger of working, because I don’t think that the spicy autocorrect leadership cadre would hesitate to hurt people if they could build something that’s actually impressive.

Did the Aella moratorium from r/sneerclub carry over here?

Because if not

for the record, im currently at ~70% that we’re all dead in 10-15 years from AI. i’ve stopped saving for retirement, and have increased my spending and the amount of long-term health risks im taking

it’s clear that the moon was right and we have it coming

Ugh, this post has me tilted- if your utility function is

max sum log(spending on fun stuff at time t ) * p(alive_t) s.t. cash at time t = savings_{t-1}*r + work_t - spending_t,

etc.,

There’s no fucking way the optimal solution is to blow all your money now, because the disutility of living in poverty for decades is so high. It’s the same reason people pay for insurance: no one expects their house is going to burn down tomorrow, but protecting yourself against the risk is the 100% correct decision.

Idk, they are the Rationalist^{tm} so what the hell do I know.
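For anyone who wants to poke at the argument above, here is a minimal sketch of it, with several simplifying assumptions that are not in the comment: a fixed pot of money instead of ongoing wages, no interest by default, and a made-up survival curve that stays at 1.0 for ten years and then slides down to 0.3 by year 15 (the "~70% dead" figure). The function names `optimal_spending` and `survival_curve` are hypothetical. For log utility with a single budget constraint, the optimum has a closed form: each year's spending is proportional to the probability of being alive that year.

```python
def optimal_spending(wealth, survival, growth=1.0):
    """Closed-form optimum of: max sum_t p_t * log(c_t)
    subject to sum_t c_t / growth**t == wealth.

    The first-order condition p_t / c_t = lam / growth**t gives
    c_t = wealth * p_t * growth**t / sum(p).
    """
    total = sum(survival)
    return [wealth * p * growth**t / total for t, p in enumerate(survival)]


def survival_curve(years=40, doom_start=10, doom_end=15, p_doom=0.7):
    """Illustrative (invented) survival probabilities, one per year."""
    curve = []
    for t in range(years):
        if t < doom_start:
            curve.append(1.0)            # nothing happens yet
        elif t >= doom_end:
            curve.append(1.0 - p_doom)   # the 30% "we made it" branch
        else:
            frac = (t - doom_start) / (doom_end - doom_start)
            curve.append(1.0 - p_doom * frac)  # linear slide in between
    return curve


plan = optimal_spending(wealth=100.0, survival=survival_curve())
first_year_share = plan[0] / 100.0
print(f"share of the pot spent in year 0: {first_year_share:.1%}")
print(f"spending in the final year: {plan[-1]:.2f}")
```

Under these invented numbers the first year gets roughly 5% of the pot, and the later years still get a meaningful allocation, because log utility punishes near-zero spending in any year you might survive to see. Different utility functions or survival curves will move the numbers, but "spend it all now" only falls out if the survival probability after some date is treated as exactly zero.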

Same Aella Same, but moon.

For the record, I’m currently at ~70% that we’re all dead in 10-15 years from the moon getting mad at us. I’ve stopped saving for retirement, and have increased my spending towards a future moon mission to give it lots of pats and treats and tell it it’s a good boy and we love it, please don’t get mad.

As a moonleftalonist, I have increased my spending towards nuking all space travel sites (not going well, apparently those things are expensive and really hard to find on ebay), as it is clear that the moon doesn’t want our attention, it never asked for it, and never tried to visit us. Respect the moons privacy!

Our secondary plan is making a big sign saying ‘we are here if you want to talk’.

Is increasing the amount of long-term health risks code for showering even less?

rip aella girl. aella dead girl

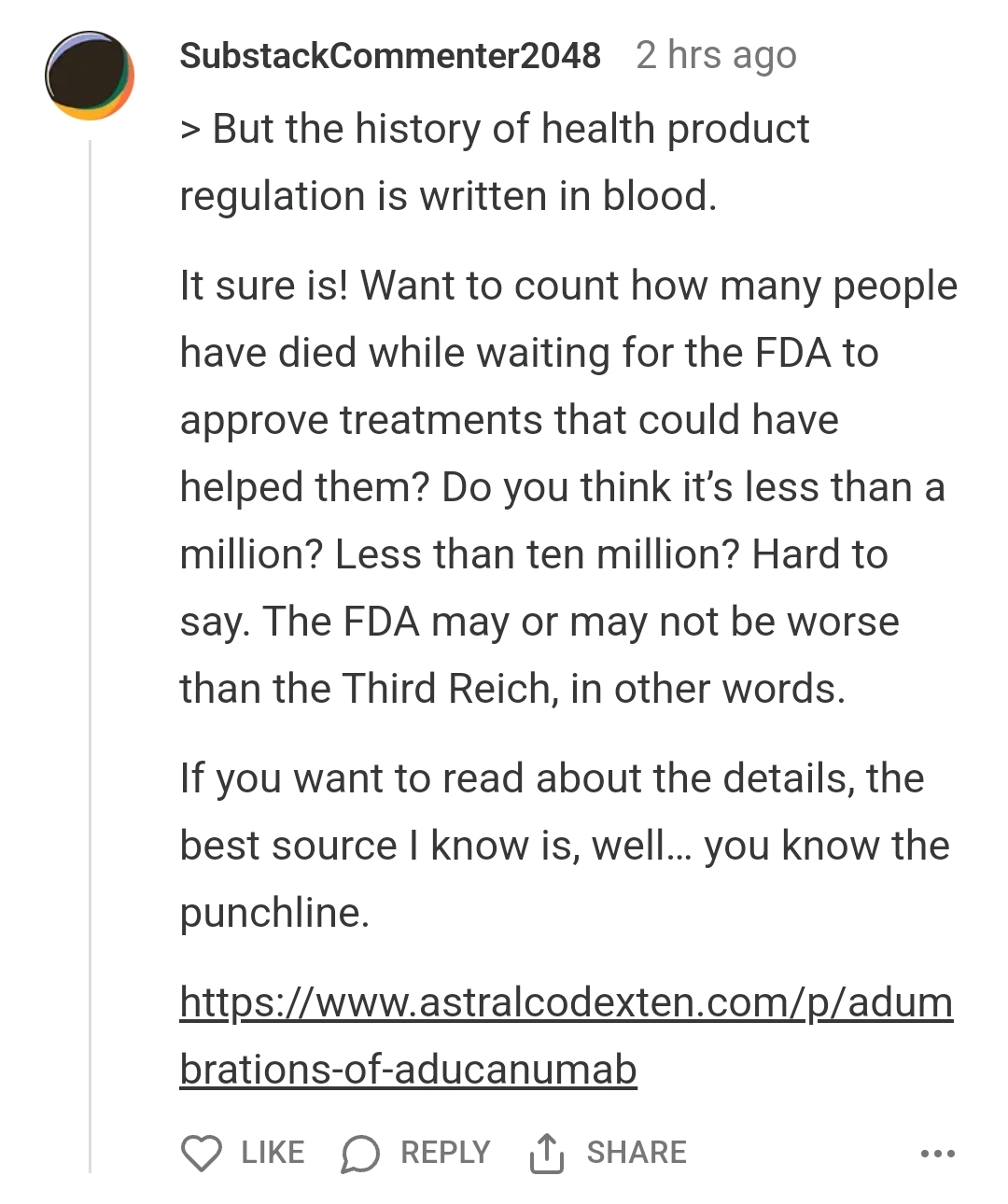

ah yes, aduhelm (aducanumab), probably first drug that FDA approved despite zero evidence that it works, but they wanted to push something, anything that maybe perhaps will show some marginal benefit (it was discontinued few months ago, but in time it was for sale they raked in some serious undeserved money). understandably this made a lot of people very angry, especially people that make drugs that work https://www.science.org/content/blog-post/aducanumab-approval https://www.science.org/content/blog-post/goodbye-aduhelm

and can you guess why it was discontinued? it’s because that company has new, equally useless antibody, that also got approval, but has none of that pr stink around so they can repeat entire process again

Literally one of the Vogons talked about in the opening, well done substackcommenter2048.

introducing the human brain to utilitarianism is a lot like introducing a proof system to Falso

Falso

Quality shitpost, top tier SIGBOVIK content, especially appreciate this:

oh wow re-colonizing your mouth with a bacteria that continuously produces an antibiotic is a bad idea? Who could have seen this coming???

insane Timothy Treadwell voice ‘SCOOTER IS SHITTING HIMSELF’

I have a business idea for him. Small batch alcohol produced by harvesting ethanol produced by this bacteria found in Aella’s teeth scrapings. Call it Mutella 1140, the official alcohol shot of rationalist orgies.

uh

Sorry for that. It was funnier in my head.

i mean you managed fresh horror from Aella, that’s not nothing

They have that IPA made with yeast from a hipster’s beard flakes, so there’s…uh…precedent.

This article motivating and introducing the ThunderKittens language has, as its first illustration, a crying ancap wojak complaining about people using GPUs. I thought it was a bit silly; surely that’s not an actual ancap position?

On Lobsters, one of the resident cryptofascists from the Suckless project decided to be indistinguishable from the meme. He doesn’t seem to comprehend that I’m mocking his lack of gumption; unlike him, I actually got off my ass when I was younger and wrote a couple GPU drivers for ATI/AMD hardware. It’s difficult but rewarding, a concept foreign to fascists.

a crossover sneer from the Nix Zulip:

This is an easy place to add a hook that goes through each message and asks a language model if the message violates any rules. Computers are about as good as humans at interpreting rules about tone and so on, and the biases come from the training data, so for any specific instance the decision is relatively impartial.

please for the love of fuck kick the fascists out of your community because they’ll never stop with this shit

This is using-a-sheep’s-bladder-to-predict-earthquakes territory.

Do you suppose that an LLM can detect a witch?

This is another one for the “throw an AI model at the problem with no concrete plans for how to evaluate its performance” category.

jesus christ that post is so not even wrong it’s hard to know where to start

When randoms from /all wander into the vale of sneers: