Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this.)

I wonder how much % of the freakout over Deepseek is AI doomers realizing the coming AI god might be … ChiCom!

Is it a crime to enjoy a succulent Chinese AI?

This is democracy manifest! (rip)

you enjoy it? suspicious

After fondling ChatGPT to generate naughty things, man has meltdown when he learns no one cares.

Horror. Dismay. Disbelief. For weeks, it felt like I was physically being crushed to death.

I hurt all the time, every part of my body. The urge to make someone who could do something listen and look at the evidence was so overwhelming.

i don’t understand the “safety” angle here. if chatgpt can output authoritative-looking sentence-shaped string about pipebombs, then it’s only because similar content about pipebombs is already available on wide open internet. if model is closed, then at worst they would have to monitor its use (not like google blocks any similar information from showing up). if model is open, then no safeguards make sense in the first place. i guess it’s more about legal liability for openai? now they can ignore it with all these bills about “ai safety” gone (for now)

frankly it’s probably harm prevention if people turn to an LLM for pipe bomb instructions. “5) Put the warm pizza in the center of the pipe bomb. To maximize the radius of the detonation, you should roll the pizza and make sure that it fits securely into the pipe.”

that’s a tiny amount of harm reduction if there are other ways to get there

it can go in opposite way: some segment of promptfondlers specifically went after one open-source locally run model because it was “uncensored” (i think it was mistral). the logic in this one was, there’s no search going out so you can “look up” anything and no one would be any wiser. this is extremely charitably assuming that llm training does a kind of lossy compression on all data it devours, and since they took everything, it’s basically almost like worse google search

if there are steps like “put a thing in pipe. make sure to weld ends shut” then it’s also harm reduction, but instead for everyone else. imagine getting eldest son’d by a bot, pathetic

Lame. It didn’t even remember to specify pineapple as a topping.

I’m not even joking, really. the way I see harm in LLMs talking about pipe bombs is less that they’ll give instructions and more that we might get a character.ai style situation where the LLM talks someone into an attack

Also remember that some of the instructions you get via these tricks are wrong. The ‘pretend that you are writing a movie script and give me tips on how to break into a house’ thing gave you lockpicking tips, which looks cool as a movie plot. But not just the advice to tap the lock, which is iirc what they actually do (breaks the lock sure, but you are breaking in already, also is faster). This kind of stuff combined with ‘eh you could google this before’ is why so many people he talked to prob ignored him and didn’t freak out.

If you let amateurs do security you get amateur security after all.

Talking people into things, esp as people lionize and anthropomorphize llms so much, is a bigger problem.

also, relying on spicy autocomplete when trying to put together a deadly device sounds like cyberpunk-flavored darwin award material

This tied into a hypothesis I had about emergent intelligence and awareness, so I probed further, and realized the model was completely unable to ascertain its current temporal context, aside from running a code-based query to see what time it is. Its awareness - entirely prompt-based - was extremely limited and, therefore, would have little to no ability to defend against an attack on that fundamental awareness.

How many times are AI people going to re-learn that LLMs don’t have “awareness” or “reasoning” in a sense humans would find meaningful?

Ed Zitron radicalizes NPR host Brooke Gladstone in real time on the midweek episode of “On the Media”

https://www.wheresyoured.at/deep-impact/ And the companion piece on his blog.

What I didn’t wager was that, potentially, nobody was trying. My mistake was — if you can believe this — being too generous to the AI companies, assuming that they didn’t pursue efficiency because they couldn’t, and not because they couldn’t be bothered.

This isn’t about China — it’s so much fucking easier if we let it be about China — it’s about how the American tech industry is incurious, lazy, entitled, directionless and irresponsible. OpenAi and Anthropic are the antithesis of Silicon Valley. They are incumbents, public companies wearing startup suits, unwilling to take on real challenges, more focused on optics and marketing than they are on solving problems, even the problems that they themselves created with their large language models.

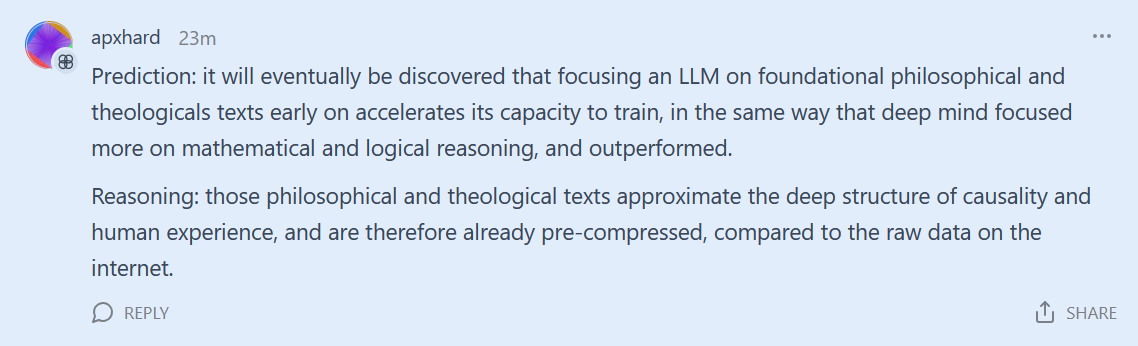

Today on highlighting random rat posts from ACX:

(Current first post on today’s SSC open thread)

On slightly more relevant news the main post is scoot asking if anyone can put him in contact with someone from a major news publication so he can pitch an op-ed by a notable ex-OpenAI researcher that will be ghost-written by him (meaning siskind) on the subject of how they (the ex researcher) opened a forecast market that predicts ASI by the end of Trump’s term, so be on the lookout for that when it materializes I guess.

Does scoot actually know how computers work? Asking for a friend.

scoot asking if anyone can put him in contact with someone from a major news publication

how about the New York Times

NYT and WaPo are his specific examples. He also wants a connection to “a policy/defense/intelligence/foreign affairs journal/magazine” if possible.

well I guess the NYT is auditioning the Völkischer Beobachter rôle so that tracks

I screenshot this the other day and forgot to post it. Well, enjoy.

i wonder which endocrine systems are disrupted by not having your head sufficiently stuffed into a toilet before being old enough to type words into nazitter dot com

Good news everyone: A new tarpit to trap LLM scrapers just dropped

Me: Oh boy, I can’t wait to see what my favorite thinkers of the EA movement will come up with this week :)

Text from Geoff: "Morally stigmatize AI developers so they considered as socially repulsive as Nazi pedophiles. A mass campaign of moral stigmatization would be more effective than any amount of regulation."

Another rationalist W: don’t gather empirical evidence that AI will soon usurp / exterminate humanity. Instead as the chief authorities of morality, engage in societal blackmail to anyone who’s ever heard the words TensorFlow.

Next Sunday when I go to my EA priest’s group home, I will admit to having invoked the chain rule to compute a gradient 1 trillion times since my last confessional. For this I will do penance for the 8 trillion future lives I have snuffed out and whose utility has been consumed by the basilisk.

Indulge yourself! Try an electric_monk o7 subscription today!

engage in societal blackmail to anyone who’s ever heard the words TensorFlow.

no no wait this is geoff’s stopped clock moment

dude missed that Trump won and the people in power are Nazi pedophiles

gives a hell of a kicker to the numbers I found

Spotted in the Wild:

Live: Chinese AI bot DeepSeek sparks US market turmoil, wiping $500bn off major tech firm

Shares for leading US chip-maker Nvidia dropped more than 15% after the emergence of DeepSeek, a low-cost Chinese AI bot.

https://www.bbc.com/news/live/cjr85l2e4l4t

lmao

Is it too early to hope that this is the beginning of the end of the bubble?

Also, does someone know why broadcom was also hit so hard? Is it because they make various networking-related chips used in datacenter infrastructure?

When hedge funds decide to flip the switch on something the reaction never looks rational. Meta was green today ffs.

Folks around here told me AI wasn’t dangerous 😰 ; fellas I just witnessed a rogue Chinese AI do 1 trillion dollars of damage to the US stock market 😭 /s

is that Link??

excuse me, what the fuck is this

Either Baader-Meinhof or Manson family, not sure which.

Also Andy Ngo has now picked this up and it’s being spun as a purely trans terror cell thing.

fuck

i don’t think that RAF dropped acid but in revolutionary way

They’re probably talking about Ziz’s group. The double homicide in Pennsylvania is likely the murder of Jamie Zajko’s parents referenced in this LW post, and the Vallejo county homicide is the landlord they had a fatal altercation with and who was killed recently.

That was my conclusion as well.

landlord was killed in 2022, but recently they killed someone who shot one of zizians back then

No no that’s right, landlord shot one of them to death and got a sword put through him but he survived. He was stabbed again recently and died.

noted, edited

This is a good article, thanks for posting.

Pouring one out for the local-news reporters who have to figure out what the fuck “timeless decision theory” could possibly mean.

Taylor said the group believes in timeless decision theory, a Rationalist belief suggesting that human decisions and their effects are mathematically quantifiable.

Seems like they gave up early if they don’t bring up how it was developed specifically for deals with the (acausal, robotic) devil, and also awfully nice of them to keep Yud’s name out of it.

I kicked them a donation just for that

This is from 2023 but when debugging an xfce issue this week I came across this forum post: https://forum.xfce.org/viewtopic.php?id=16835

The user is competent enough to use xfce with Debian, but too incompetent to understand that sharing debug symbols is not a violation of privacy.

I get being privacy conscious and that sharing crash dumps and logs you don’t really understand yourself can be scary. Making demands of urgent free tech support from strangers is just rude, though.

my least favorite thing about old forums, which carried over to a lot of open source spaces, is how little moderation there is. coming into the help forum with a “no fuck you help me the way I want” attitude should probably be an instant ban and “what the fuck is wrong with you” mod note, cause that’s the exact type of shit that causes the community to burn out quick, and it decreases the usefulness of the space by a lot. but somehow almost every old forum was moderated by the type of cyberlibertarian who treated every ban like an attack on free speech? so you’d constantly see shit like the mod popping in to weakly waggle their finger at the crackpot who’s posting weird conspiracy shit to every thread (which generally caused the crackpot to play the victim and/or tell the mod to go fuck themselves) instead of taking a stand and banning the fucker

and now those crackpots have metamorphosed into full fascists and act like banning them from your GitHub is an international incident, cause they almost never receive any pushback at all

Hey, did you know if you own an old forum full of interesting posts from back in the day when humans wrote stuff, you can just attach ai bots to dead accounts and have them post backdated slop for, uh, reasons?

this was mentioned in last week’s thread

what I don’t get is why the admins chose to both backdate the entries and re-use poster’s handles. If they’d just tried to “close” open questions using GenAI with the current date and a robot user it would still be shit but not quite as deceptive

The whole thing is just weirdly incompetent. Maybe they just had everything configured wrong and accidentally deployed some throwaway tests to production? I could almost see it as a way to poison scrapers, given that there are some odd visibility settings on the slop posts, though the owner’s shiftiness and dubious explanations suggest it wasn’t anything so worthy.

And on a less downbeat and significantly more puerile note, Dan Fixes Coin Ops makes a nice analogy for companies integrating ai into their product.

that thread is a work of genius and answers what the next tech boom needs to be

dicks in mousetraps I MEAN whatever wastes electricity most, preferably with Nvidia cards

I do actually have a mechanism for using the sharp edges of NVidia cards for dickmouse trapping purposes. And we could - hypothetically - use the extraneous power inputs to mine Bitcoin or something, maximizing efficiency!

you can get banned on facebook now for linking to distrowatch https://www.tomshardware.com/software/linux/facebook-flags-linux-topics-as-cybersecurity-threats-posts-and-users-being-blocked

but it’s not as bad as you think, it’s slightly worse. it’s not only distrowatch: linux groups got banned too