Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

New piece from Brian Merchant: DOGE’s ‘AI-first’ strategist is now the head of technology at the Department of Labor, which is about…well, exactly what it says on the tin. Gonna pull out a random paragraph which caught my eye, and spin a sidenote from it:

“I think in the name of automating data, what will actually end up happening is that you cut out the enforcement piece,” Blanc tells me. “That’s much easier to do in the process of moving to an AI-based system than it would be just to unilaterally declare these standards to be moot. Since the AI and algorithms are opaque, it gives huge leeway for bad actors to impose policy changes under the guise of supposedly neutral technological improvements.”

How well Musk and co. can impose those policy changes is gonna depend on how well they can paint them as “improving efficiency” or “politically neutral” or some random claptrap like that. Between Musk’s own crippling incompetence, AI’s utterly rancid public image, and a variety of factors I likely haven’t factored in, imposing them will likely prove harder than they thought.

(I’d also like to recommend James Allen-Robertson’s “Devs and the Culture of Tech” which goes deep into the philosophical and ideological factors behind this current technofash-stavaganza.)

Can’t wait for them to discover that the DoL was created to protect them from labor

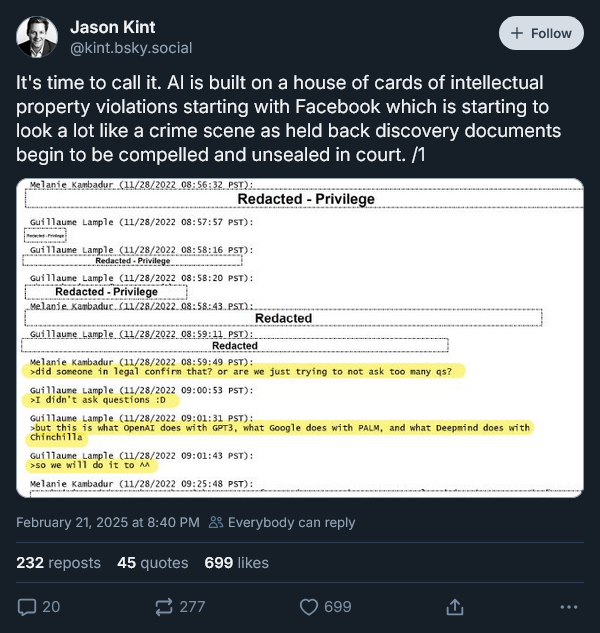

Court documents regarding Facebook’s plagiarism lawsuit just started getting unsealed, and ho-lee shit is this a treasure trove:

This confirms basically everything I said a week ago - AI violates copyright by design, and a single copyright suit going through means it’s open fucking season on the AI industry. Wonder who’s gonna blink first.

Our local pro-entertainment-industry lobby group (Brein, the kind of group that gets thepiratebay blocked) is already successfully going after smaller LLMs and datasets that breach copyright, created (and made freely available) by overenthusiastic amateurs/hobbyists.

This might seem like a positive thing, but I doubt they will have the willpower/power/desire to go after the big ones. (And even then not sure they are the good guys here).

oh would you look at that, something some people made proved helpful and good, and now cloudflare is immediately taking the idea to deploy en masse with no attribution

double whammy: every one of the people highlighted is a dude

“it’s an original idea! we’re totes doing the novel thing of model synthesis to defeat them! so new!” I’m sure someone will bleat, but I want them to walk into a dark cave and shout at the wall forever

(anubis isn’t strictly the same in that set of things, but I link it both because completeness and subject relevance)

https://github.com/TecharoHQ/anubis/issues/50 and of course we already have chatgptfriends on the case of stopping the mean programmer from doing something the Machine doesn’t like. This person doesn’t even seem to understand what anubis does, but they certainly seem confident chatgpt can tell him.

oh cute, the clown cites[0] POPIA in their wallspaghetti, how quaint

(POPIA’s an advancement, on paper. In practice it’s still……not working well. source: me, who has tried to make use of it on multiple occasions. won’t get into details tho)

[0] fsvo

New thread from Baldur Bjarnason:

Keep hearing reports of guys trusting ChatGPT’s output over experts or even actual documentation. Honestly feels like the AI Bubble’s hold over society has strengthened considerably over the past three months

This also highlights my annoyance with everybody who’s claiming that this tech will be great if everyone uses it responsibly. Nobody’s using it responsibly. Even the people who think they are, already trust the tech much more than it warrants

Also constantly annoyed by analysis that assumes the tech works as promised or will work as promised. The fact that it is unreliable and nondeterministic needs to be factored into any analysis you do. But people don’t do that because the resulting conclusion is GRIM as hell

LLMs add volatility and unpredictability to every system they touch, which makes those systems impossible to manage. An economy with pervasive LLM automation is an economy in constant chaos

On a semi-related note, I expect the people who are currently making heavy use of AI will find themselves completely helpless without it if/when the bubble finally bursts, and will probably struggle to find sympathy from others thanks to AI indelibly staining their public image.

(The latter part is assuming heavy AI users weren’t general shitheels before - if they were, AI’s stain on their image likely won’t affect things either way. Of course, “AI bro” is synonymous with “trashfire human being”, so I’m probably being too kind to them :P)

In other news, IETF 127 (which is being held in November) is facing a boycott months in advance. The reason? It’s being held in the United States.

This likely applies to a lot of things, but that would have been unthinkable before the election.

Think Germany and the UK created travel advisories against the US. (As we the Dutch are mostly neutral cowards, 20% Putin’s lackeys, almost an American vassal state, and very good at ignoring the rest of the world, doubt we will anytime soon.)

So far France and the Netherlands have already set up programs to poach American scientists fired during the recent ripping of copper from the walls, so I wouldn’t say there’s nothing done

A thing which came under criticism here, as while this program is being set up they are also doing budget cuts on universities. So don’t expect much from .nl here. Also our gov is a mess, more interested in putting up border controls (this year they caught 250 people, which they consider a big success for re-instituting border controls). So yeah doubt, esp with Wilders in gov and opposition at the same time.

As a Canadian we’re just waiting for the tanks to start running through.

We’re apparently going to get an election April 28th, but is there still going to be a Canada by then? Who knows.

A LessWronger declares,

social scientists are typically just stupider than physical scientists (economists excepted).

As a physicist, I would prefer not receiving praise of this sort.

The post to which that is a comment also says a lot of silly things, but the comment is particularly great.

Imagine a perfectly spherical scientist…

or uniform duncity?

And high pomposity

That list (which isn’t properly sourced) seems to combine both high academic fields with non academic fields so I have no idea what this list is trying to prove even. (Also, see the fakeness of IQ and there is pressure for ‘smart’ people to go into stem etc etc). I wouldn’t base my argument on a quick google search which gives you information from a tabloid site. Wonder why he didn’t link to his source directly? More from this author: “We met the smartest Hooters girl in the world who has a maths degree and wants to become a pilot” (The guy is now a researcher at ‘Hope not Hate’ (not saying that to mock the guy or the organization, just found it funny, do hope he feels a bit of ‘oh, I should have made different decisions a while back, wish I could delete that’))

The ignorance about social science on display in that article is wild. He seems to think academia is pretty much a big think tank, which I suppose is in line with the extent of the rationalists’ intellectual curiosity.

On the IQ tier list, I like the guy responding to the comment mentioning “the stats that you are citing here”. Bro.

lmao, economists probably did deserve to catch this stray

Are economists considered physical scientists? I’ve read it as “social scientists are dumb except for economists”. Which fits my prejudice for econo-brained less wrongers.

Yeah prob important to note that one of the lw precursor blogs was from an economist, so that is why they consider them one of the good fields. Important to not call out your own tribe.

No, it’s just praise from lesswrong counts as a slight.

Yeah, the exclusion of the dismal science got a chuckle out of me.

ya know the Nicole the Fediverse Chick spam? this poster thinks it’s a revenge Joe job:

Nice detective work. The second time I got one of those I figured it resembles the false flag channel ad spammers on IRC. I still wonder occasionally what #superbowl at supermets did for someone to go on a multi-year spamming campaign against them.

Nicole was a bot? I need to make some phone calls… (jk)

Don’t make me tap the sign!

Don’t Date Robots!

Kill them instead

Don’t anthropomorphize, they are not alive, destroy them.

Razer claims that its AI can identify 20 to 25 percent more bugs compared to manual testing, and this can reduce QA time by up to 50 percent as well as cost savings of up to 40 percent

as usual this is probably going to be only the simplest shit, and I don’t even want to think of what the secondary downstream impacts from just listening to this shit without thought will be

Marginally related, but I was just served a YouTube ad for chewing gum (yes, I’m too lazy to set up ad block).

“Respawn, by Razer. They didn’t have gaming gum at Pompeii, just saying.”

I think I felt part of my frontal lobe die to that incomprehensible sales pitch, so you all must be exposed to it as well.

Isn’t this what got crowdstrike in trouble?

not quite the same but I can see potential for a similar clusterfuck from this

also doesn’t really help how many goddamn games are running with rootkits, either

If I had to judge Razer’s software quality based on what little I know about them, I’d probably raise my eyebrows because they ship some insane 600+ MiB driver with a significant memory impact with their mice and keyboards that’s needed to use basic features like DPI buttons and LED settings, when the alternative to that is a 900 kiB open source driver which provides essentially the same functionality.

And now their answer to optimization is to staple a chatbot onto their software? I think I pass.

Well the use of stuff like fuzzers has been a staple for a long time so ‘compared to manual testing’ is doing some work here.

The secret is to have cultivated a codebase so utterly shit that even LLMs can make it better by just randomly making stuff up

At least they don’t get psychic damage from looking at the code

Ran across a new piece on Futurism: Before Google Was Blamed for the Suicide of a Teen Chatbot User, Its Researchers Published a Paper Warning of Those Exact Dangers

I’ve updated my post on the Character.ai lawsuit to include this - personally, I expect this is gonna strongly help anyone suing character.ai or similar chatbot services.

Roundup of the current bot scourge hammering open source projects

https://thelibre.news/foss-infrastructure-is-under-attack-by-ai-companies/

We can add that to the list of things threatening to bring FOSS as a whole crashing down.

Plus the culture being utterly rancid, the large-scale AI plagiarism, the declining industry surplus FOSS has taken for granted, having Richard Stallman taint the whole movement by association, the likely-tanking popularity of FOSS licenses, AI being a general cancer on open-source and probably a bunch of other things I’ve failed to recognise or make note of.

FOSS culture being a dumpster fire is probably the biggest long-term issue - fixing that requires enough people within the FOSS community to recognise they’re in a dumpster fire, and care about developing the distinctly non-technical skills necessary to un-fuck the dumpster fire.

AI’s gonna be the more immediately pressing issue, of course - it’s damaging the commons by merely existing.

The problem with FOSS for me is the other side of the FOSS surplus: namely corporate encircling of the commons. The free software movement never had a political analysis of the power imbalance between capital owners and workers. This results in the “Freedom 0” dogma, which makes everything workers produce with a genuine communitarian, laudably pro-social sentiment, to be easily coopted and appropriated into the interests of capital owners (for example with embrace-and-extend, network effects, product bundling, or creative backstabbing of the kind Google did to Linux with the Android app store). LLM scrapers are just the latest iteration of this.

A few years back various groups tried to tackle this problem with a shift to “ethical licensing”, such as the non-violent license, the anti-capitalist software license, or the do no harm license. While license-based approaches won’t stop capitalists from using the commons to target immigrants (NixOS), enable genocide (Meta) or bomb children (Google), this was in my view worthwhile as a rallying cry of sorts; drawing a line in the sand between capital owners and the public. So if you put your free time on a software project meant for everyone and some billionaire starts coopting it, you can at least make it clear it’s non-consensual, even if you can’t out-lawyer capital owners. But these ethical licenses initiatives didn’t seem to make any strides, due to the FOSS culture issue you describe; traditional software repositories didn’t acknowledge or make any infrastructure for them, and ethical licenses would still be generically “non-free” in FOSS spaces.

(Personally, I’ve used FOSS operating systems for 26 years now; I gave up on contributing or participating in the “community” a long time ago, burned out by all the bigotry, hostility, and First World-centrism of its forums.)

Update on the Vibe Coder Catastrophe™: he’s killed his current app and seems intent to vibe code again:

Personally, I expect this case won’t be the last “vibe coded” app/website/fuck-knows-what to get hacked to death - security is virtually nonexistent, and the business/techbros who’d be attracted to it are unlikely to learn from their mistakes.

In lesser corruption news, California Governor Gavin Newsom has been caught distributing burner phones to California-based CEOs. These are people that likely already have Newsom’s personal and business numbers, so it’s not hard to imagine that these phones are likely to facilitate extralegal conversations beyond the existing ~~bribery~~ legitimate business lobbying before the Legislature. With this play, Newsom’s putting a lot of faith into his sexting game.

Tbh, weird. If I were a hyper-capitalist, CA-based CEO, I would take the burner phone as an insult. I’d see it as a lack of faith in the capture of the US. Who needs plausible deniability when you just own the fucking country?

it’s weird and lowkey insulting imo. let’s assume that for some bizarre reason tech ceo needs a burner phone to call governor newsom: do you think i can’t get that myself, old man? i’d assume it’s bugged or worse

or worse

Man, I’m getting tired of these remakes.

Even worse, he got caught handing them out. And even with all that, I’d expect a tech CEO to just go ‘why not use signal?’ or ‘what threat profile do you think we have?’ (sorry I keep coming back to this, it is just so fucking weird, like ‘everything I know I learned from television shows’ kind of stuff)

Brings to mind the sopranos scene of the two dudes trying to shake down a starbucks or starbucks analogue for protection money

the phones seem to serve no practical purpose. they already have his number and I don’t think you can conclude much from call logs. so suppose they are symbolic. what he would be communicating is that he’s so fully pliant that he is willing to do things there is no possible excuse for, just to suck up to them. the opposite of plausible deniability

Governor Saul Goodsom.

Gavin Newsom has also allegedly been working behind the scenes to kill pro-transgender legislation; and on his podcast he’s been talking to people like Charlie Kirk and Steve Bannon and teasing anti-trans talking points.

I guess this all makes sense if he’s going to go for a presidential bid: try to appeal to the fascists (it won’t work and also to heck with him) while also laying groundwork for the sort of funding a presidential bid needs.

If I was a Californian CEO and received a burner phone I’d text back “Thanks for the e-waste :<” but maybe that’s why I’m not a CEO.

When this all was revealed his popularity also tanked apparently. Center/left now dislikes him, the right doesn’t trust him. So another point for the ‘don’t move right on human rights you dummies’ brigade.

Asahi Lina posts about not feeling safe anymore. Orange site immediately kills discussion around post.

For personal reasons, I no longer feel safe working on Linux GPU drivers or the Linux graphics ecosystem. I’ve paused work on Apple GPU drivers indefinitely.

I can’t share any more information at this time, so please don’t ask for more details. Thank you.

Whatever has happened there, I hope it will resolve in positive ways for her. Her amazing work on the GPU driver was actually the reason I got into Rust. In 2022 I stumbled across this twitter thread from her and it inspired me to learn Rust – and then it ended up becoming my favourite language, my refuge from C++. Of course I already knew about Rust beforehand, but I had dismissed it, I (wrongly) thought that it’s too similar to C++, and I wanted away from that… That twitter thread made me reconsider and take a closer look. So thankful for that.

Damn, that sucks. Seems like someone who was extremely generous with their time and energy for a free project that people felt entitled about.

This was linked in the HN thread: https://marcan.st/2025/02/resigning-as-asahi-linux-project-lead/

Finished reading that post. Sucks that Linux is such a hostile dev environment. Everything is terrible. Teddy K was on to something

between this, much of the recent outrage wrt rust-in-kernel efforts, and some other events, I’ve pretty rapidly gotten to “some linux kernel devs really just have to fuck off already”

That email gets linked in the marcan post. JFC, the thin blue line? Unironically? Did not know that Linux was a Nazi bar. We need you, Ted!!!

The most generous reading of that email I can pull is that Dr. Greg is an egotistical dipshit who tilts at windmills twenty-four-fucking-seven.

Also, this is pure gut instinct, but it feels like the FOSS community is gonna go through a major contraction/crash pretty soon. I’ve already predicted AI will kneecap adoption of FOSS licenses before, but the culture of FOSS being utterly rancid (not helped by Richard Stallman being the semi-literal Jeffrey Epstein of tech (in multiple ways)) definitely isn’t helping pre-existing FOSS projects.

There already is a (legally hilarious apparently) attempt to make some sort of updated open source license. This and the culture, the lack of corporations etc. giving back, and the knowledge that all you do gets fed into the AI maw prob will stifle a lot of open source contributions.

Hell, noticing that everything I add to game wikis gets monetized by fandom (and how shit they are) already soured me on doing normal wiki work, and now with the ai shit it is even worse.

The darvo to try and defend hackernews is quite a touch. Esp as they make it clear how hn is harmful. (Via the kills link)

Here’s my audio/video dispatch about framing tech through conservation of energy to kill the magical thinking of generative ai and the like

podcast ep: https://pnc.st/s/faster-and-worse/968a91dd/kill-magic-thinking

video ep: https://www.youtube.com/watch?v=NLHmtYWzHz8

Starting things off here with a couple solid sneers of some dipshit automating copyright infringement - one from Reid Southen, and one from Ed Newton-Rex:

lmao he thinks copyright and watermark are synonyms

Not exactly, he thinks that the watermark is part of the copyrighted image and that removing it is such a transformative intervention that the result should be considered a new, non-copyrighted image.

It takes some extra IQ to act this dumb.

I have no other explanation for a sentence as strange as “The only reason copyrights were the way they were is because tech could remove other variants easily.” He’s talking about how watermarks need to be all over the image and not just a little logo in the corner!

The “legal proof” part is a different argument. His picture is a generated picture so it contains none of the original pixels, it is merely the result of prompting the model with the original picture. Considering the way AI companies have so far successfully acted like they’re shielded from copyright law, he’s not exactly wrong. I would love to see him go to court over it and become extremely wrong in the process though.

His picture is a generated picture so it contains none of the original pixels

Which is so obviously stupid I shouldn’t have to even point it out, but by that logic I could just take any image and lighten/darken every pixel by one unit and get a completely new image with zero pixels corresponding to the original.
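For anyone who wants the counterexample spelled out, here is a minimal sketch in plain Python. The tiny 2x2 "image" and the helper names (`nudge`, `nudge_image`, `identical_pixels`, `max_channel_difference`) are made up for illustration, and it assumes 8-bit RGB tuples. It only shows that "no pixel matches the original" is a trivially cheap property to produce, not anything about how a court would treat it.

```python
def nudge(value: int) -> int:
    """Move an 8-bit channel value by one unit, staying inside 0..255."""
    # Lighten by one where possible; darken by one at the upper limit.
    return value + 1 if value < 255 else value - 1


def nudge_image(image):
    """Return a copy of `image` with every channel of every pixel changed by one unit."""
    return [[tuple(nudge(c) for c in pixel) for pixel in row] for row in image]


def identical_pixels(a, b):
    """Count pixels that are exactly equal at the same position in both images."""
    return sum(pa == pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def max_channel_difference(a, b):
    """Largest per-channel difference between the two images."""
    return max(
        abs(ca - cb)
        for ra, rb in zip(a, b)
        for pa, pb in zip(ra, rb)
        for ca, cb in zip(pa, pb)
    )


# Hypothetical 2x2 RGB image, including the 0 and 255 edge cases.
original = [
    [(0, 0, 0), (255, 255, 255)],
    [(12, 200, 77), (255, 0, 128)],
]
nudged = nudge_image(original)

print(identical_pixels(original, nudged))        # 0
print(max_channel_difference(original, nudged))  # 1
```

Zero pixels in common, and no channel is off by more than one unit, so the result is visually the same picture.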

Nooo you see unlike your counterexample, the AI is generating the picture from scratch, moulding noise until it forms the same shapes and colours as the original picture, much like a painter would copy another painting by brushing paint onto a blank canvas which … Oh, that’s illegal too … ? … Oh.

inb4 decades of art forgers apply for pardons

The “legal proof” part is a different argument. His picture is a generated picture so it contains none of the original pixels, it is merely the result of prompting the model with the original picture. Considering the way AI companies have so far successfully acted like they’re shielded from copyright law, he’s not exactly wrong. I would love to see him go to court over it and become extremely wrong in the process though.

It’ll probably set a very bad precedent that fucks up copyright law in various ways (because we can’t have anything nice in this timeline), but I’d like to see him get his ass beaten as well. Thankfully, removing watermarks is already illegal, so the courts can likely nail him on that and call it a day.

“what is the legal proof” brother in javascript, please talk to a lawyer.

E: so many people posting like the past 30 years didn’t happen. I know they are not going to go as hard after google as they went after the piratebay but still.

@BlueMonday1984 “This new AI will push watermark innovation” jfc

New watermark technology interacts with increasingly widespread training data poisoning efforts so that if you try and have a commercial model remove it the picture is replaced entirely with dickbutt. Actually can we just infect all AI models so that any output contains a hidden dickbutt?

the future that e/accs want!